In this blog series, The Real Trillion Euro Gap, I compare two paths of development, from 1999 to a projected 2045:

- A destructive misdevelopment of our society, shaped by short-term interests, and

- A proactively designed digital future that preserves and evolves pre-digital achievements.

For decades, I have worked on a holistic concept for such a society. But the comparison shows:

A gap of trillions of euros has emerged—as economic damage and as the investment needed to rectify these misdevelopments.

This gap is no coincidence. It is the result of missed opportunities, ignored patents, and a digitization often dominated by autocratic business models.

Yet it is not just about numbers. It is about the question:

What could an inclusive, participatory society have looked like—and how can we still shape it?

A Pedagogical Milestone: The Segmenting Method (1985)

As early as 1985, Ingrid Daniels and I laid the foundation in our diploma thesis for a principle now known in AI as tokenization.

The Segmenting Method was a hybrid, participant-centered approach that broke down words into meaningful, recognizable units—not into letters, but into meaning-bearing segments.

Back then, it was about literacy. Today, this approach is relevant for AI, the Semantic Web, and inclusive education.

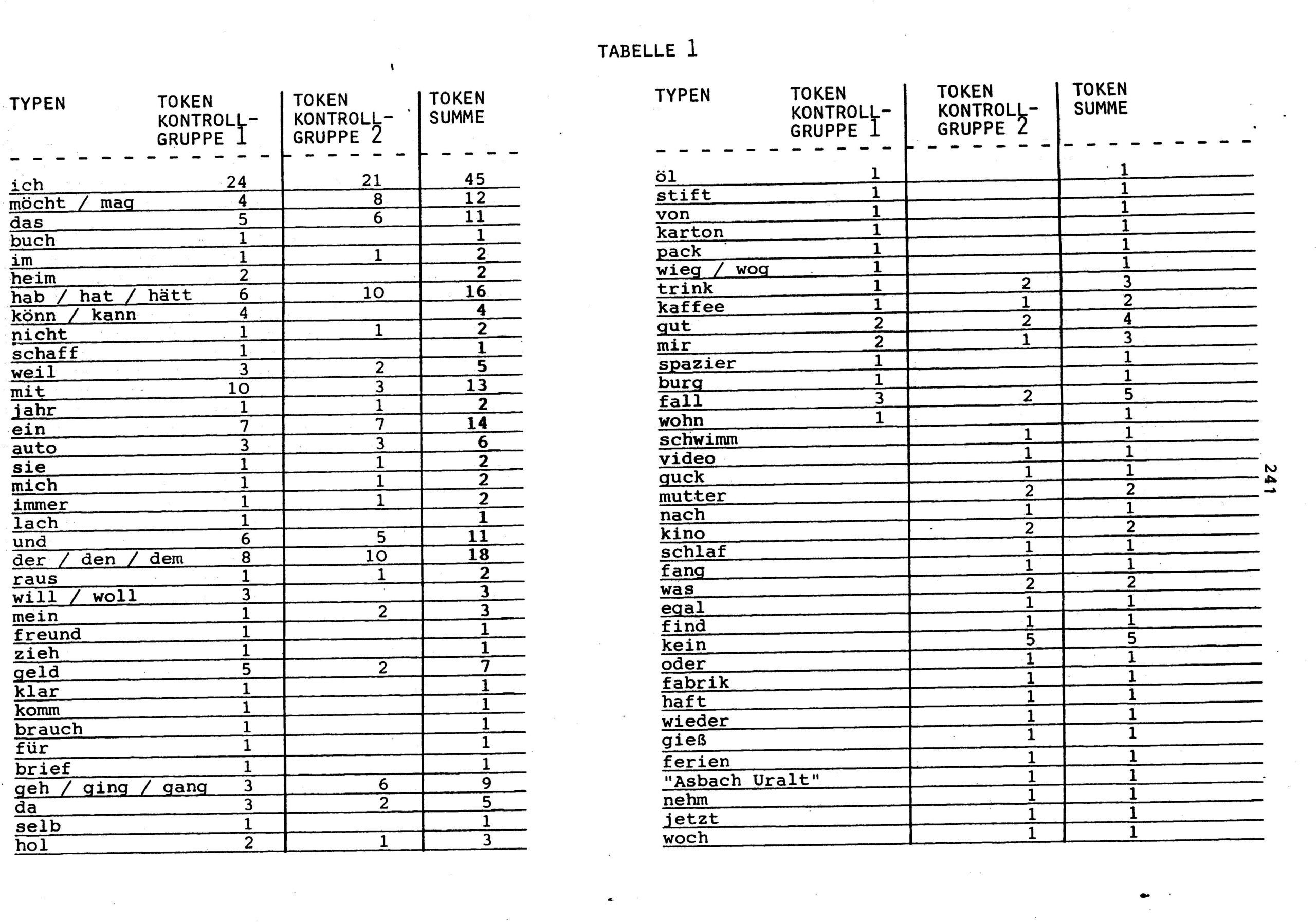

Even then, we spoke of tokens. (Excerpt from the teaching materials we created.)

Core Principles of the Segmenting Method

- Segmentation instead of letter isolation:

Words are broken down into recurring units such as “Haus-” (“house-”), “-tür” (“-door”), or “-licht” (“-light”).

Example: “Hauslicht” (“house light”) → “Haus-” + “-licht” (analogous to “Tageslicht”/“daylight”).

Goal: Rapid pattern recognition to accelerate reading and writing through association.

- Contextual embedding:

Segments are taught in everyday situations (e.g., “Where else do you find -licht?” → “Mondlicht”/“moonlight,” “Kerzenlicht”/“candlelight”).

This promotes transferability and reduces cognitive load.

- Participant orientation:

The segments come from the learners’ own language—similar to the language experience approach.

Learners identify patterns in self-created texts.

- Visual support:

Color coding or symbols anchor the segments.

Example: All words with “-ung” (“-tion”/“-ing”) are marked in blue to highlight them as “noun-building blocks.”

- Quick successes:

Through frequent segments (e.g., “ge-”/“pre-”, “-en”/“-ing”), learners decode entire word families—without analyzing every letter.
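The segmentation principle described above can be sketched in a few lines of Python. This is only an illustration, not the 1985 teaching method itself: the small segment inventory, the function names `segment` and `word_families`, and the greedy longest-match strategy are all assumptions made for this example. The words are the ones used in this section.

```python
from collections import defaultdict

# Hypothetical segment inventory, taken from the examples in this section.
SEGMENTS = {"haus", "tür", "licht", "tages", "mond", "kerzen"}


def segment(word, inventory=SEGMENTS):
    """Split a word into known segments by greedy longest match.

    Returns a list of segments, or None if the inventory
    cannot cover the word from left to right.
    """
    word = word.lower()
    parts = []
    i = 0
    while i < len(word):
        # Try the longest remaining candidate first, then shorter ones.
        for j in range(len(word), i, -1):
            if word[i:j] in inventory:
                parts.append(word[i:j])
                i = j
                break
        else:
            # No known segment starts at position i.
            return None
    return parts


def word_families(words, inventory=SEGMENTS):
    """Group words by the segments they share."""
    families = defaultdict(list)
    for w in words:
        parts = segment(w, inventory)
        if parts:
            for p in parts:
                families[p].append(w)
    return dict(families)


words = ["Hauslicht", "Tageslicht", "Mondlicht", "Kerzenlicht", "Haustür"]
families = word_families(words)
print(segment("Hauslicht"))
print(families["licht"])
```

Grouping by shared segment mirrors the question “Where else do you find -licht?”: one known segment unlocks a whole word family. A greedy match is the simplest choice here and can miss valid splits that a backtracking search would find, so it should be read as a teaching sketch rather than a robust tokenizer.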

Advantages—Then and Now

- Efficiency: Faster learning success through pattern recognition.

- Motivation: Learners unlock word families and see progress.

Comparison: Segmenting Method (1985) vs. Modern Reading Methods (2026)

| Criterion | Segmenting Method (1985) | Modern Methods (2026) |
|---|---|---|
| Basic Approach | Hybrid: Segments + holism | Multimodal: Phonics, whole-word, morphemics + digital tools |
| Units | Meaning-bearing segments (e.g., “-ung”) | Morphemics (“word building blocks”) + syllable method |
| Technology | Manual segmentation, later databases | AI-supported platforms (e.g., “Antura,” “GraphoGame”) |
| Participant Orientation | Everyday language, self-created texts | Personalized learning via algorithms (e.g., “Duolingo ABC”) |
| Visual Aids | Color coding, symbols | Gamification (e.g., “Endless Alphabet”), augmented reality |
| Target Group | Adult illiterates | Inclusive approaches for all age groups |
| Scientific Basis | Practical experience, linguistic intuition | Neuroscience, long-term studies on reading fluency |

Current Trends Confirming the Segmenting Method

- Morphemic approaches are now standard (e.g., in German primary schools).

- My 1999 idea (European Patent ES2374881T3):

Using 1,000 core categories, similar to today’s “high-frequency word” lists.

- AI-driven segmentation:

Tools like “GraphoGame” adaptively adjust learning paths, a principle we advocated early.

- Language experience + technology:

Apps like “Speechify” convert speech to text and automatically mark segments.

- Social context:

Modern methods emphasize collaborative learning (e.g., “literacy cafés”)—exactly like our approach.

Critique of Modern Methods

- Over-technologization: Some tools lose the human dialogue (à la Freire/Freinet).

- Cultural blind spots: Data-driven segmentation often ignores local contexts.

- Commercialization: Many apps are not freely accessible—our approach focused on open knowledge sharing.

Conclusion: Why This Approach Advances Society

The Segmenting Method was visionary because it:

- Anticipated hybridity (now standard in pedagogy),

- Emphasized participant orientation and contextualization (now rediscovered),

- Showed how socially relevant research drives innovation—without autocratic business models.

This example illustrates a central concern of the series The Real Trillion Euro Gap:

Digitization is not an end in itself.

It must be designed to be inclusive, participatory, and democratic—just like pre-digital research.

Where we fail to pay attention, we risk a digital autocracy serving the interests of a few—rather than a society that makes technology usable for all.

The question is not whether we can shape the future. It is whether we want to.

Everyone must—and everyone can—contribute to a livable society.

Are you afraid of a blackboard? No. So why be afraid to judge digitization?

Just like a blackboard, it is a tool!